Collaborative Augmented Assembly: Task Distribution in Human-Robot Collaborative Assembly with Phone-based Augmented Reality

Workshop edit – video production by 6ix Films (Toronto, CA)

Participants engaged in cooperative, augmented human-robot design-to-assembly workflows built on shared vision, sequencing, and feedback loops.

WORKSHOP LEADERS

Daniela Mitterberger

Princeton University, School of Architecture; ETH Zürich, Design++, Gramazio Kohler Research

Lidia Atanasova

Technical University of Munich, TUM School of Engineering and Design, Department of Architecture

Kathrin Dörfler

Technical University of Munich, TUM School of Engineering and Design, Department of Architecture

Human-in-the-loop design and production chains can leverage machine intelligence while keeping designers and fabricators holistically engaged. Integrating human capabilities into robotic fabrication processes can therefore substantially enhance versatility, robustness, and productivity, particularly in on-site construction.

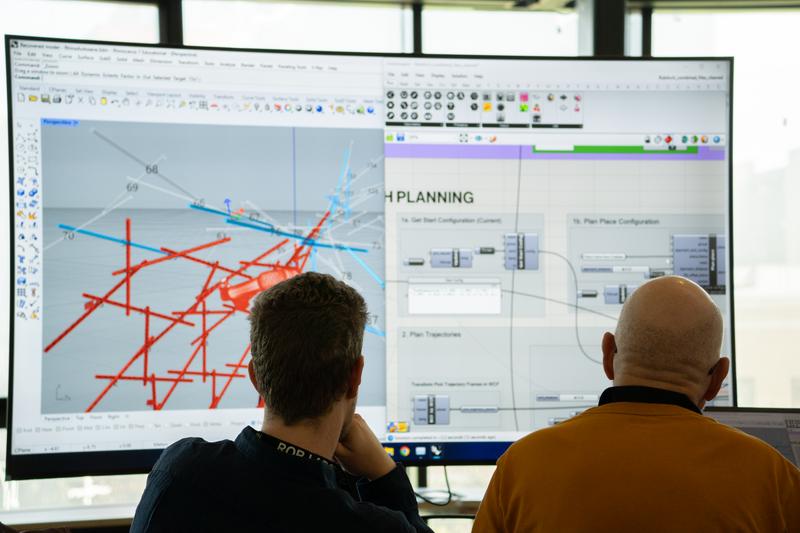

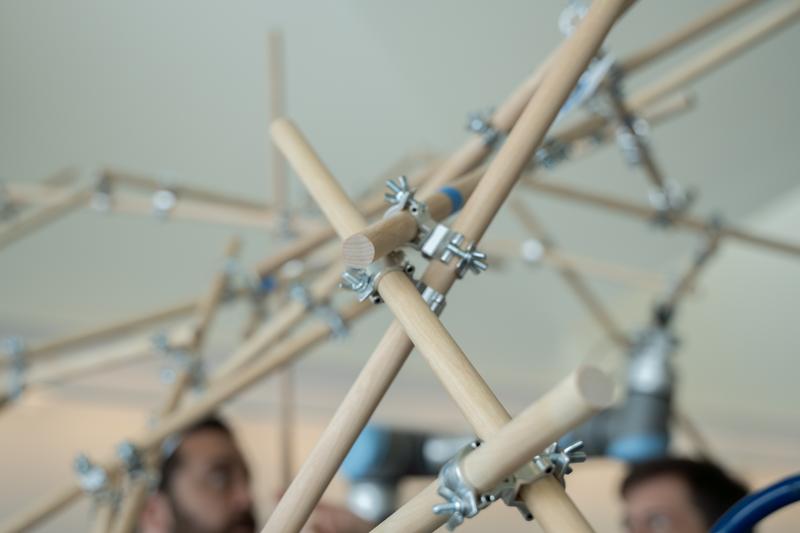

In this workshop, participants learned about and took part in a cooperative, augmented human-robot design-to-assembly workflow. Humans and robots worked together to build a complex timber structure that neither could have achieved alone.

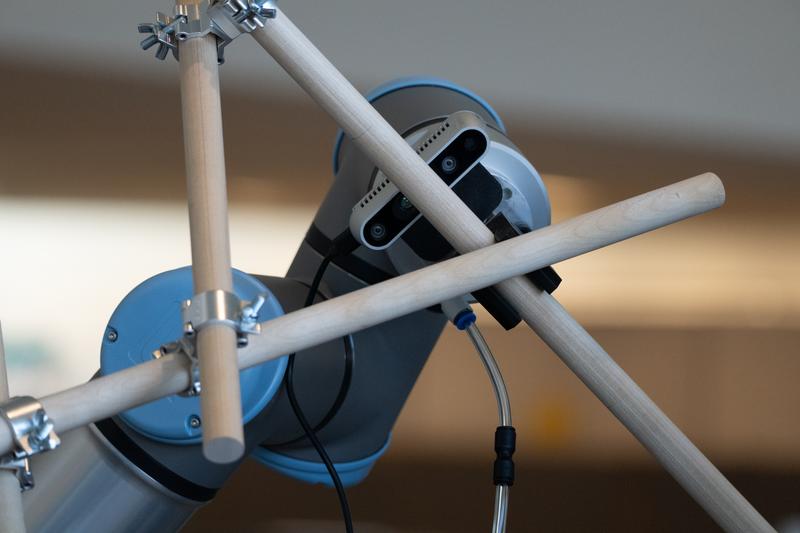

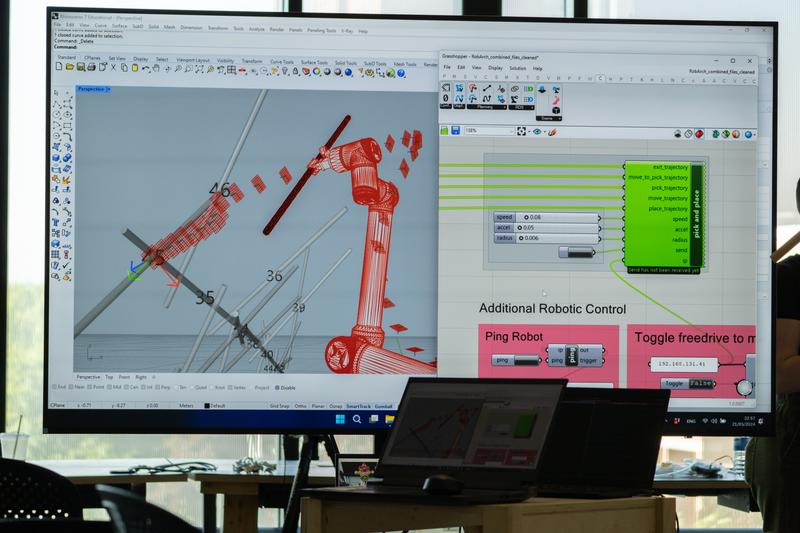

First, participants used a digital design tool guided by human input to create the information model of the timber assembly. The model accounted for fabrication constraints, structural stability, and the distribution of tasks between humans and robots, so that the structure remained stable and fabricable at every assembly step. During the augmented assembly, two mobile robots precisely positioned timber members and, at critical points, provided temporary support to the structure. Human participants played an essential role by manually closing the reciprocal frames and adding mechanical connectors. Throughout the process, humans and robots shared a digital-physical workspace, and humans received task instructions through a mobile AR interface built on new features of COMPAS XR.